Let's talk about something that's quietly reshaping the world. If you've asked ChatGPT a question, been stunned by an image from DALL-E, or watched an AI-generated video, you've witnessed a kind of magic. But this magic isn't an overnight trick. It's the result of a decades-long technological revolution that is fundamentally changing how we create, communicate, and even think.

This is the AIGC - Artificial Intelligence Generated Content - Revolution.

From the early statistical models of the 1950s to the mind-bending power of today's large language models, the journey has been one of exponential growth. But how did we get here? What are the key breakthroughs, the "aha!" moments, that brought us from simple text generators to AI that can write code, compose music, and design art?

In this comprehensive post, we'll trace that exact history. We'll start with the simple definitions for beginners, journey through the "deep learning" breakthroughs for intermediate readers, and finally, dive into the advanced architectures that power the revolution today.

What is AIGC?

AIGC, or Artificial Intelligence Generated Content, simply refers to digital content - like images, music, articles, and code - that is created by an AI model rather than by a human.

Think of it as an incredibly skilled apprentice. You provide an instruction (a "prompt"), and the AI uses its vast training to generate something new. The core mission of AIGC is to make content creation faster, more accessible, and more efficient.

The Drivers of Modern AIGC

The idea of turning an instruction into generated content isn't new. So why the sudden explosion? The difference between today's AIGC and older models lies in three key drivers:

- Massive Datasets: GPT-3 was trained on roughly 570GB of filtered text, a colossal leap from the roughly 38GB used for GPT-2. More data means the AI learns a more comprehensive and realistic "map" of the world.

- Bigger, Better Models: We are building larger and more sophisticated "foundation models" (the "brains" of the operation). GPT-3, for example, has 175 billion parameters, up from GPT-2's 1.5 billion.

- Immense Compute: We now have the specialized hardware (like GPUs and TPUs) needed to actually train these massive models.
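To put the first two drivers in numbers, here is a minimal Python sketch of the scale-up from GPT-2 to GPT-3. The dataset sizes are the ones quoted in the list above. The parameter counts (1.5 billion and 175 billion) are the publicly reported model sizes, and the names `GPT2`, `GPT3`, and `scale_up` are made up for this illustration.

```python
# Rough scale-up from GPT-2 to GPT-3.
# Dataset sizes (GB) are the figures quoted in this post; parameter counts
# are the publicly reported model sizes, added here for illustration.
GPT2 = {"data_gb": 38, "params": 1.5e9}
GPT3 = {"data_gb": 570, "params": 175e9}


def scale_up(old: float, new: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


data_factor = scale_up(GPT2["data_gb"], GPT3["data_gb"])
param_factor = scale_up(GPT2["params"], GPT3["params"])

print(f"Training data: {data_factor:.0f}x larger")
print(f"Parameters:    {param_factor:.0f}x larger")
```

Under these figures, the training data grew about 15x while the model itself grew more than 100x, which is part of why the third driver, compute, matters so much.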

The current flagships of this new era are models like ChatGPT (specialized in conversation), DALL-E 2 (a master artist for text-to-image), and Codex (a programmer that speaks human language).

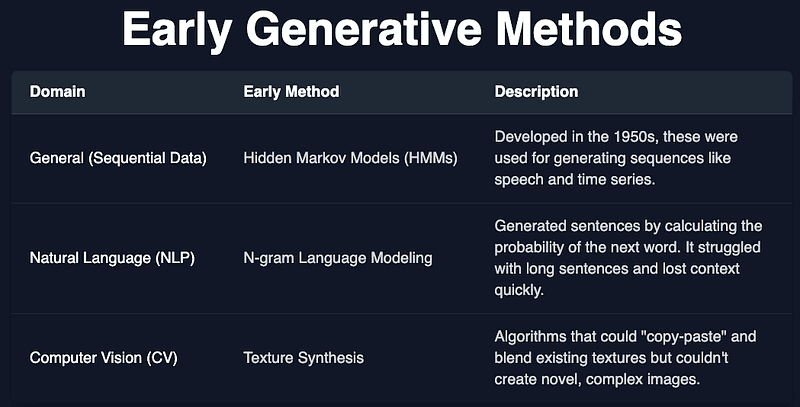

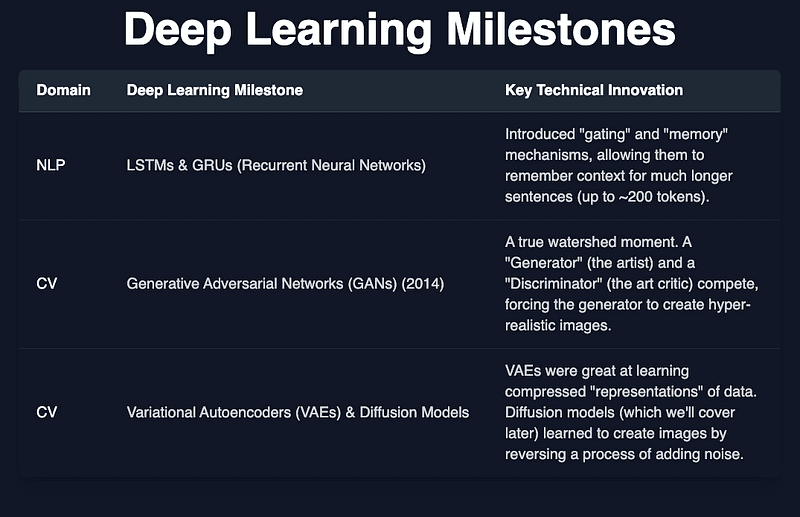

A Comprehensive History of Generative AI

To understand today's revolution, we have to look back. The history of generative models can be split into two major eras.